Part 3: Transformations, Instancing, Multisampling & Distribution

The third part introduces a lot of new features to the ray tracer.

1. Transformations

To implement transformations, I first wrote a Matrix4f class that supports basic matrix operations such as inverse, transpose, and multiplication with another matrix or a vector. This version of the ray tracer supports three types of transformations: Translation, Rotation and Scaling.

While parsing the scene, the final transformation matrix is stored for each object and later used for transforming Rays, Hits and BoundingBoxes. For meshes, the transformation matrix is stored only for the main mesh object, not for each of its child triangles. To transform a bounding box, the transformation is applied to all 8 corners of the box and the new bounding box is computed from these transformed corners, instead of applying the transformation directly to the min and max corners. This matters because after a rotation the transformed min and max corners are generally no longer the extremes of the box.
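The corner-based bounding box update can be sketched as follows. This is a minimal Python illustration, not the ray tracer's actual code: `transform_point` and `transform_aabb` are hypothetical names, and matrices are assumed to be 4x4 row-major nested lists.

```python
from itertools import product

def transform_point(m, p):
    """Apply a 4x4 row-major matrix to a 3D point (homogeneous w = 1)."""
    x, y, z = p
    return tuple(m[r][0] * x + m[r][1] * y + m[r][2] * z + m[r][3] for r in range(3))

def transform_aabb(m, box_min, box_max):
    """Transform all 8 corners, then rebuild an axis-aligned box around them."""
    # zip pairs up (min, max) per axis; product enumerates the 8 corner combinations.
    corners = [transform_point(m, c) for c in product(*zip(box_min, box_max))]
    new_min = tuple(min(c[i] for c in corners) for i in range(3))
    new_max = tuple(max(c[i] for c in corners) for i in range(3))
    return new_min, new_max

# 90 degree rotation around the z axis.
rot_z_90 = [[0, -1, 0, 0], [1, 0, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
print(transform_aabb(rot_z_90, (0, 0, 0), (2, 1, 1)))  # ((-1, 0, 0), (0, 2, 1))
```

In this example, transforming only the two original corners would give (0, 0, 0) and (-1, 2, 1), where the "max" x is smaller than the "min" x, so the result would not be a valid box.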

To intersect rays with transformed objects, each ray is transformed into the object's local space by applying the inverse of the object's transformation matrix. If the ray hits the object, the intersection point and the normal at that point are transformed back to world coordinates. The point is transformed with the transformation matrix itself, while the normal is transformed with the inverse transpose of the matrix so that it stays perpendicular to the surface under non-uniform scaling.
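A small Python sketch of this round trip is given below. It is only an illustration under stated assumptions: the function names are hypothetical, matrices are 4x4 row-major nested lists, and the inverse is passed in precomputed rather than calculated here.

```python
import math

def mat_vec(m, v, w):
    """Multiply a 4x4 matrix by (v, w): w = 1 for points, w = 0 for directions."""
    x, y, z = v
    return tuple(m[r][0] * x + m[r][1] * y + m[r][2] * z + m[r][3] * w for r in range(3))

def transpose(m):
    return [list(row) for row in zip(*m)]

def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def ray_to_local(inv_m, origin, direction):
    """Bring a world-space ray into the object's local space."""
    # The direction is deliberately not renormalized here, so a hit
    # parameter t found in local space is also valid in world space.
    return mat_vec(inv_m, origin, 1.0), mat_vec(inv_m, direction, 0.0)

def hit_to_world(m, inv_m, local_point, local_normal):
    """Bring a local-space hit point and normal back to world space."""
    world_point = mat_vec(m, local_point, 1.0)
    world_normal = normalize(mat_vec(transpose(inv_m), local_normal, 0.0))
    return world_point, world_normal
```

For a matrix that scales x by 2, a local normal of (1, 1, 0) becomes proportional to (0.5, 1, 0) in world space, which is still perpendicular to the stretched surface. Multiplying the normal by the matrix itself would give (2, 1, 0), which is not.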

2. Instancing

Actually, I found this topic the most complicated of all, since for a long time I could not decide how to handle Meshes and MeshInstances. In the end, I settled on the following:

- For the meshes defined in the XML, I stored a Mesh object together with its transformation matrix. The bounding volume hierarchy for the mesh is constructed without applying these transformations.

- For the mesh instances defined in the XML, I stored a MeshInstance object, which is implemented as a separate class. The MeshInstance's BVH points directly to its base mesh's BVH, which was computed without any transformations. The transformations specified for the MeshInstance are also stored. If the resetTransform attribute is set for the instance, its transformation matrix consists only of the transformations specified for this MeshInstance. Otherwise, its transformations are applied on top of the existing ones of the base mesh.
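The resetTransform rule for instances can be sketched like this. It is a hypothetical Python illustration, not the actual implementation: `instance_matrix` is an invented name, and it assumes the column-vector convention, where the matrix on the right of a product is applied first.

```python
def mat_mul(a, b):
    """Product of two 4x4 row-major matrices."""
    return [[sum(a[r][k] * b[k][c] for k in range(4)) for c in range(4)]
            for r in range(4)]

def instance_matrix(base_matrix, own_matrix, reset_transform):
    """Final matrix of a mesh instance."""
    if reset_transform:
        # Ignore the base mesh's transformations entirely.
        return own_matrix
    # Apply the base mesh's transformations first, then the instance's own.
    return mat_mul(own_matrix, base_matrix)
```

Since the BVH is built in the untransformed space and shared, only this matrix (and its inverse) differs between a mesh and its instances.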

For this part, I also needed to change my previous BVH implementation. In the previous version of the ray tracer, only spheres and triangles were stored in the leaf nodes, meaning that the triangles of a mesh were stored in the BVH tree instead of the mesh object itself. In this version, in order to support instancing, I store the mesh objects directly. Each mesh keeps a separate BVH that is constructed from its own triangles.

3. Multisampling

For multisampling, Jittered Sampling is used to sample points inside each pixel. To obtain the final RGB value of a pixel, Box Filtering is applied simply by taking the average of the RGB values obtained for the sample points.
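As a rough illustration, jittered sampling and box filtering could look like the Python sketch below. The function names are hypothetical, and the sketch assumes the sample count per pixel is a perfect square (n x n).

```python
import random

def jittered_samples(n, rng=random):
    """Return n*n sample positions in the unit pixel, one random point per grid cell."""
    return [((i + rng.random()) / n, (j + rng.random()) / n)
            for j in range(n) for i in range(n)]

def box_filter(colors):
    """Average a list of RGB tuples with equal weights."""
    count = len(colors)
    return tuple(sum(c[k] for c in colors) / count for k in range(3))
```

Each (x, y) offset is added to the pixel's corner to get the point on the image plane that the sample ray passes through.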

While implementing multisampling, I was not sure when to clamp the pixel values. I could not decide whether to clamp the final value computed for the pixel or each value obtained from the individual sample rays. It made more sense to me to let the values accumulate and clamp only the final value. Luckily, this approach turned out to be correct :)
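The difference between the two clamping orders can be seen in a tiny Python sketch. The names are hypothetical and a 0 to 255 color range is assumed.

```python
def average(colors):
    count = len(colors)
    return tuple(sum(c[k] for c in colors) / count for k in range(3))

def clamp(color, lo=0.0, hi=255.0):
    return tuple(min(max(v, lo), hi) for v in color)

def resolve_pixel(sample_colors):
    """Accumulate first, clamp once at the end."""
    return clamp(average(sample_colors))

def resolve_pixel_clamp_first(sample_colors):
    """Alternative order: clamp every sample, then average."""
    return average([clamp(c) for c in sample_colors])

samples = [(510.0, 100.0, 0.0), (0.0, 100.0, 0.0)]
print(resolve_pixel(samples))              # (255.0, 100.0, 0.0)
print(resolve_pixel_clamp_first(samples))  # (127.5, 100.0, 0.0)
```

Clamping each sample first throws away the energy of very bright samples, so the two orders can give visibly different pixels near highlights.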

4. Distribution Ray Tracing

Distribution ray tracing helps simulate various visual effects without adding much cost beyond what is already paid for multisampling. Under this topic, the following visual effects are implemented:

4.1 Depth of Field

Until now, only the Pinhole Camera model was supported, in which all objects in the scene are in sharp focus. To get a real camera effect, where only part of the scene is in focus through a lens placed at the aperture, parameters such as ApertureSize and FocusDistance are added to the camera model.
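One common way to turn these two parameters into rays is the thin lens approach sketched below in Python. This is an assumption about the general technique, not the exact code of this ray tracer: the function and parameter names are hypothetical, the aperture is assumed to be a square of side `aperture_size` centred at the camera position, and `pinhole_dir` is the normalized direction the pinhole camera would have used for the sample.

```python
import math
import random

def add(a, b): return tuple(x + y for x, y in zip(a, b))
def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def scale(a, s): return tuple(x * s for x in a)
def dot(a, b): return sum(x * y for x, y in zip(a, b))

def normalize(a):
    n = math.sqrt(dot(a, a))
    return tuple(x / n for x in a)

def dof_ray(cam_pos, right, up, gaze, pinhole_dir,
            aperture_size, focus_distance, rng=random):
    """Return (origin, direction) of a depth-of-field ray for one sample."""
    # Where the pinhole ray meets the focal plane (focus_distance along gaze).
    t = focus_distance / dot(pinhole_dir, gaze)
    focal_point = add(cam_pos, scale(pinhole_dir, t))
    # Random point on the square aperture.
    dx = (rng.random() - 0.5) * aperture_size
    dy = (rng.random() - 0.5) * aperture_size
    origin = add(cam_pos, add(scale(right, dx), scale(up, dy)))
    # Every lens sample aims at the same focal point.
    return origin, normalize(sub(focal_point, origin))
```

Objects at the focus distance are hit at the same point by every lens sample and stay sharp, while closer or farther objects are hit at different points and get blurred when the samples are averaged.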

4.2 Glossy Reflections (Imperfect Mirrors)

To simulate metallic objects that are not polished, such as brushed metals, materials are extended with a Roughness parameter. With randomness involved, the reflection rays diverge from the perfect reflection direction, creating an imperfect mirror effect.
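A common way to perturb the reflection direction is sketched below in Python. It is an assumption about the technique rather than the exact code used here: the names are hypothetical, `r` is assumed to be the normalized perfect reflection direction, and the random offsets are drawn uniformly from [-0.5, 0.5).

```python
import math
import random

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(a):
    n = math.sqrt(sum(c * c for c in a))
    return tuple(c / n for c in a)

def orthonormal_basis(r):
    """Return two unit vectors u, v perpendicular to r and to each other."""
    # Replace the smallest-magnitude component by 1 to get a non-parallel vector.
    helper = list(r)
    helper[min(range(3), key=lambda i: abs(r[i]))] = 1.0
    u = normalize(cross(helper, r))
    v = cross(r, u)
    return u, v

def glossy_direction(r, roughness, rng=random):
    """Randomly tilt the perfect reflection direction r by up to roughness/2 per axis."""
    u, v = orthonormal_basis(r)
    xi1 = rng.random() - 0.5
    xi2 = rng.random() - 0.5
    return normalize(tuple(r[i] + roughness * (xi1 * u[i] + xi2 * v[i])
                           for i in range(3)))
```

With a roughness of 0 the function returns `r` unchanged, so a perfect mirror is just a special case.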

4.3 Motion Blur

To create a motion blur effect, a Motion Vector is defined for the related object in the input file. In general, motion can be an arbitrary transformation, but this ray tracer supports only translational motion. To simulate motion blur, each ray is associated with a random time parameter sampled between 0 and 1, where 0 corresponds to the initial position of the object and 1 to its final position. It is important to note that this time parameter is randomly generated only for primary rays. All other rays, such as shadow, reflection and refraction rays, inherit the parameter from their parent primary ray.
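The time handling can be sketched in Python as follows. The `Ray` class and the function names are hypothetical. The sketch also assumes the moving object is intersected by shifting the ray backwards by `time * motion_vector` instead of moving the object, which is equivalent for pure translations.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class Ray:
    origin: tuple
    direction: tuple
    time: float

def primary_ray(origin, direction, rng=random):
    """Only primary rays draw a fresh time in [0, 1)."""
    return Ray(origin, direction, rng.random())

def secondary_ray(parent, origin, direction):
    """Shadow, reflection and refraction rays reuse the parent's time."""
    return Ray(origin, direction, parent.time)

def to_object_frame(ray, motion_vector):
    """Shift the ray so the object can be tested at its initial position."""
    offset = tuple(ray.time * m for m in motion_vector)
    origin = tuple(o - d for o, d in zip(ray.origin, offset))
    return Ray(origin, ray.direction, ray.time)
```

Reusing the parent's time keeps a whole ray tree consistent: a shadow ray sees the moving object at the same instant as the primary ray that spawned it.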

4.4 Area Lights & Soft Shadows

Up to now, the ray tracer supported only PointLights. To create soft shadow effects, AreaLights are introduced in this version. Instead of using a fixed point as the light position, many points are randomly sampled on the area light, which produces softer shadow transitions when combined with multisampling. As a side note, area lights are still not visible in the images, just like point lights.
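Sampling a point on an area light could look like the Python sketch below. The details are assumptions made for illustration: the light is taken to be a square described by a center, a normal and a side length, and all names are hypothetical.

```python
import math
import random

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(a):
    n = math.sqrt(sum(c * c for c in a))
    return tuple(c / n for c in a)

def orthonormal_basis(n):
    """Return two unit vectors spanning the plane perpendicular to n."""
    helper = list(n)
    helper[min(range(3), key=lambda i: abs(n[i]))] = 1.0
    u = normalize(cross(helper, n))
    v = cross(n, u)
    return u, v

def sample_area_light(center, normal, size, rng=random):
    """Pick a uniformly random point on a square light of side `size`."""
    u, v = orthonormal_basis(normalize(normal))
    a = (rng.random() - 0.5) * size
    b = (rng.random() - 0.5) * size
    return tuple(center[i] + a * u[i] + b * v[i] for i in range(3))
```

Each shading computation then uses its own sampled point as the light position, so points near a shadow edge see the light from only some of the samples.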

5. Quad Face Parsing

Until now, the input scenes consisted only of Triangular Meshes, but many more advanced mesh objects are composed of quads instead of triangles. To support these objects as well, Quad Meshes are parsed simply by dividing each quad into two triangles and treating the quad mesh as a triangular mesh as before.
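The quad split itself is short. A minimal Python sketch, assuming faces are given as tuples of vertex indices listed in a consistent winding order (the function names are hypothetical):

```python
def split_quad(quad):
    """Split quad (a, b, c, d) along the a-c diagonal, keeping the winding order."""
    a, b, c, d = quad
    return [(a, b, c), (a, c, d)]

def triangulate(faces):
    """Turn a mixed list of triangle and quad faces into triangles only."""
    triangles = []
    for face in faces:
        if len(face) == 4:
            triangles.extend(split_quad(face))
        else:
            triangles.append(tuple(face))
    return triangles

print(triangulate([(0, 1, 2, 3), (4, 5, 6)]))  # [(0, 1, 2), (0, 2, 3), (4, 5, 6)]
```

Keeping the same vertex order in both triangles means their normals point to the same side as the original quad.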

As a final note, in the second version of the ray tracer I had discarded the multi-threading implementation from the first version, because I thought that introducing BVH was going to solve all my problems related to rendering time. So naive of me :). Once more advanced features such as multisampling were added, the rendering time grew linearly with the number of samples. To bring rendering times back down, I reintroduced the multi-threading approach I had used previously.

Problems & Fixes

While implementing the topics described above, I encountered many problems. Some of them are described in detail below.

As a first problem, while implementing transformations, I discovered that my rotation matrices were wrong, because I was converting the rotation angle from degrees to radians twice by accident, once while parsing and once while computing the rotation matrix. Moreover, I forgot to transform the bounding box of the mesh which resulted in the following image.

While calculating diffuse and specular shading values for area lights, I realized that I was using wi as the vector going from the sample point on the area light to the intersection point. Instead, I should have used -wi. This resulted in a very interesting image, which can be seen below.

In the previous version, I thought that the reflection and refraction parts worked perfectly, at least for the provided scenes. When I tried to render metal_glass_plates.png, I noticed that I was not applying the value calculated through Beer's Law correctly. Instead of multiplying it with both the reflection and refraction colors, I was multiplying it with the reflection color only. The whole point of the absorption coefficient in Beer's Law being a vector instead of a scalar is to be able to tint dielectric materials. Because this value was not used correctly, the glass objects absorbed all color channels equally and the green tint could not be reproduced.
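For reference, the per-channel attenuation of Beer's Law can be sketched in Python as below. The function names and the example coefficients are made up for illustration. `distance` is the length the ray travelled inside the medium.

```python
import math

def beer_attenuation(absorption, distance):
    """Per-channel attenuation factor exp(-c * d) for an RGB absorption coefficient."""
    return tuple(math.exp(-c * distance) for c in absorption)

def attenuate(color, absorption, distance):
    """Apply Beer's Law to a color carried by a ray that travelled inside the medium."""
    factors = beer_attenuation(absorption, distance)
    return tuple(v * f for v, f in zip(color, factors))

# A coefficient that absorbs less green than red or blue leaves a greenish tint.
print(attenuate((1.0, 1.0, 1.0), (0.5, 0.1, 0.5), 2.0))
```

The same factor has to scale every color contribution that travelled through the medium, which is why applying it to only one of them loses the tint.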

For the cornellbox scene with the area light, everything was fine except the part of the ceiling where the area light was reflected. I realized that the angle between the l vector and the normal of the area light's surface was greater than 90 degrees there, because the normal was always assumed to point downwards. As a result, the cosine calculated for this angle was negative. To avoid this, I used the absolute value of the cosine.

Finally, for distribution ray tracing, the spheres_dof.png and cornellbox_area.png images look a little noisy around the area light reflection on the ceiling and around the edges of the soft shadows. Increasing the number of samples for both images solved the noise problem. However, with the provided number of samples, there is still a little noise in both images. The versions rendered with 900 samples are shown below.

cornellbox_area.png with 900 samples

spheres_dof.png with 900 samples

Resulting Images

|

| simple_transform.png (800x800) Pure BVH: 254 milliseconds 376 microseconds BVH + 8 threads: 84 milliseconds 362 microseconds |

|

| ellipsoids.png (800x800) Pure BVH: 969 milliseconds 355 microseconds BVH + 8 threads: 321 milliseconds 267 microseconds |

|

| spheres_dof.png (800x800) Pure BVH: 1 minute 34 seconds 815 milliseconds 794 microseconds BVH + 8 threads: 22 seconds 661 milliseconds 248 microseconds |

|

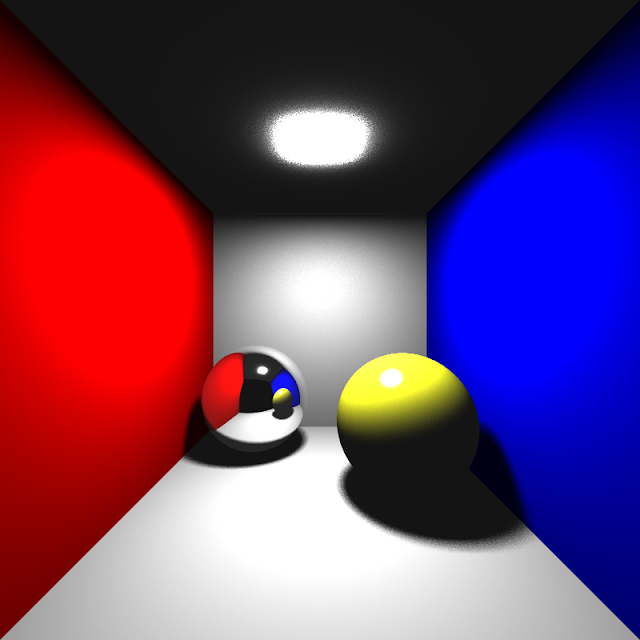

| cornellbox_brushed_metal.png (800x800) Pure BVH: 6 minutes 56 seconds 922 milliseconds 337 microseconds BVH + 8 threads: 1 minute 43 seconds 45 milliseconds 998 microseconds |

|

| cornellbox_area.png (800x800) Pure BVH: 1 minute 53 seconds 521 milliseconds 865 microseconds BVH + 8 threads: 27 seconds 98 milliseconds 61 microseconds |

|

| cornellbox_boxes_dynamic.png (750x600) Pure BVH: 18 minutes 43 seconds 624 milliseconds 499 microseconds BVH + 8 threads: 4 minutes 15 seconds 839 milliseconds 668 microseconds |

|

| metal_glass_plates.png (800x800) Pure BVH: 5 minutes 29 seconds 246 milliseconds 638 microseconds BVH + 8 threads: 59 seconds 803 milliseconds 655 microseconds |

|

| dragon_dynamic.png (800x480) Pure BVH: 53 minutes 45 seconds 154 milliseconds 834 microseconds BVH + 8 threads: 19 minutes 16 seconds 839 milliseconds 659 microseconds |
